
Generative AI (GenAI) has ushered in a new era in software testing, significantly improving the efficiency, accuracy and speed of the testing process. As agile methodologies and continuous integration/continuous deployment (CI/CD) practices shorten development cycles, AI-driven automation and optimization of software testing is becoming indispensable.

But GenAI is a fast-moving field – with impacts across various facets of software testing, from test case generation to continuous monitoring – and best practices for leveraging this technology are still being defined.

Challenges in Traditional Software Testing

Traditional software testing faces several persistent challenges:

- Manual effort: Test case generation, execution and maintenance require significant manual effort, which is time-consuming and prone to human error.

- Scalability: Scaling tests to cover a wide range of scenarios, especially in complex systems, is difficult and resource-intensive.

- Speed: Traditional testing methods struggle to keep up with fast-paced development cycles, creating bottlenecks in the CI/CD pipeline.

- Coverage: Comprehensive test coverage is hard to achieve, so bugs and vulnerabilities often go undetected.

- Cost: The resources required for extensive manual testing can be prohibitively expensive, especially for large-scale projects.

The Role of GenAI in Software Testing

Generative AI addresses these challenges by automating and enhancing various aspects of the software testing process. GenAI can automatically generate a wide range of test cases from an application's requirements and historical data, broadening coverage of possible scenarios and reducing the manual effort involved. AI-driven tools can execute test cases faster and more accurately than manual testing and can identify issues that human testers might miss, which accelerates the testing phase and supports a higher-quality product. Because GenAI tools can learn from previous test results and adapt to changes in the codebase, they make test maintenance more efficient and reduce the likelihood of outdated or irrelevant test cases.

By analyzing past test data, GenAI can predict which areas of the application are most likely to fail and prioritize testing efforts accordingly. This ensures that critical areas receive the most attention. GenAI tools can perform static code analysis to detect potential issues before they manifest in the application, reducing the number of bugs and vulnerabilities in the codebase. AI-driven dynamic testing tools can simulate real-world conditions and perform security assessments to identify vulnerabilities, ensuring that the application is robust and secure. Post-deployment, GenAI can continuously monitor application system logs for performance issues and provide real-time feedback, allowing for quick remediation of any problems that arise.
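
The prioritization idea can be illustrated with a simple, non-ML heuristic. The sketch below ranks modules by their historical failure rate plus a weight on recent code churn. The `ModuleHistory` fields, the `churn_weight` value and the sample numbers are all hypothetical, and a production tool would more likely use a trained model than a fixed formula.

```python
from dataclasses import dataclass

@dataclass
class ModuleHistory:
    name: str
    runs: int            # test runs recorded against this module
    failures: int        # how many of those runs failed
    recent_changes: int  # commits touching the module since the last release

def risk_score(m: ModuleHistory, churn_weight: float = 0.1) -> float:
    """Hypothetical heuristic: historical failure rate plus a churn penalty."""
    # Modules with no recorded runs are treated as maximally risky.
    failure_rate = m.failures / m.runs if m.runs else 1.0
    return failure_rate + churn_weight * m.recent_changes

def prioritize(modules: list[ModuleHistory]) -> list[str]:
    """Return module names ordered from highest to lowest risk."""
    return [m.name for m in sorted(modules, key=risk_score, reverse=True)]

history = [
    ModuleHistory("catalog", runs=200, failures=4, recent_changes=1),
    ModuleHistory("payments", runs=150, failures=12, recent_changes=3),
    ModuleHistory("auth", runs=180, failures=9, recent_changes=6),
]
print(prioritize(history))  # ['auth', 'payments', 'catalog']
```

Test effort would then be allocated to modules in this order, so the most failure-prone, most recently changed areas are exercised first.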

Case Study: Enhancing Software Testing with GenAI

GenAI transforms the software testing landscape by automating the creation, execution and analysis of test cases. The following example illustrates how GenAI can handle these three critical tasks in a typical software development environment.

Imagine a software development team working on a complex e-commerce platform. They need to ensure that new features, bug fixes and updates do not introduce defects and that the platform remains robust and secure. The platform includes various components such as user authentication, product catalog, shopping cart, payment processing and order management.

1. Automated Test Case Generation

GenAI analyzes user stories and requirements documents to understand the functionality needed and leverages historical test data to identify common patterns and areas that frequently fail. Using natural language processing (NLP), GenAI interprets user stories and extracts relevant information. It recognizes patterns in historical data to identify critical areas for testing and automatically generates test cases covering a wide range of scenarios, including edge cases, boundary conditions and typical user interactions.
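
A key practical step in this workflow is treating model output as untrusted: generated test cases should be parsed and validated before they enter a suite. The sketch below builds a prompt from a user story and keeps only well-formed JSON test cases. The prompt wording, the `GeneratedTestCase` fields, the category names and `fake_model` (a canned stand-in for a real LLM call) are all illustrative assumptions, not a specific vendor's API.

```python
import json
from dataclasses import dataclass

@dataclass
class GeneratedTestCase:
    title: str
    category: str      # e.g. "happy_path", "boundary"
    steps: list[str]
    expected: str

ALLOWED_CATEGORIES = {"happy_path", "edge_case", "boundary", "negative"}

def build_prompt(user_story: str) -> str:
    """Ask the model for test cases as a JSON array with fixed keys."""
    return (
        "You are a QA engineer. From the user story below, write test cases as a JSON "
        "array of objects with keys: title, category, steps, expected. "
        f"Allowed categories: {sorted(ALLOWED_CATEGORIES)}.\n\n"
        f"User story: {user_story}"
    )

def parse_test_cases(raw: str) -> list[GeneratedTestCase]:
    """Keep only well-formed cases; malformed model output is dropped, not trusted."""
    try:
        items = json.loads(raw)
    except json.JSONDecodeError:
        return []
    if not isinstance(items, list):
        return []
    cases = []
    for item in items:
        if not isinstance(item, dict):
            continue
        steps = item.get("steps")
        if (item.get("category") in ALLOWED_CATEGORIES
                and isinstance(item.get("title"), str)
                and isinstance(steps, list) and steps
                and all(isinstance(s, str) for s in steps)
                and isinstance(item.get("expected"), str)):
            cases.append(GeneratedTestCase(item["title"], item["category"], steps, item["expected"]))
    return cases

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; ignores the prompt and returns canned JSON."""
    return json.dumps([
        {"title": "Checkout with one item", "category": "happy_path",
         "steps": ["Add item to cart", "Pay with valid card"], "expected": "Order confirmed"},
        {"title": "Quantity of zero", "category": "boundary",
         "steps": ["Set quantity to 0"], "expected": "Validation error shown"},
        {"title": "Missing fields", "category": "unknown"},  # rejected by validation
    ])

story = "As a shopper, I want to check out my cart so that I can buy products."
cases = parse_test_cases(fake_model(build_prompt(story)))
print([c.title for c in cases])  # ['Checkout with one item', 'Quantity of zero']
```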

For example, for a user authentication feature, GenAI generates test cases such as a successful login with valid credentials, a failed login with invalid credentials, the password recovery process and account lockout after multiple failed attempts.
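
For illustration, those four scenarios might be expressed as pytest-style test functions. The `AuthService` class, its method names and the three-attempt lockout threshold are hypothetical stand-ins for the platform's real authentication module, included only so the example runs on its own.

```python
from typing import Optional

class AuthService:
    """Toy in-memory authentication service (hypothetical, for illustration only)."""

    def __init__(self, users: dict[str, str], max_attempts: int = 3):
        self.users = users                  # username -> password (plain text: toy only)
        self.max_attempts = max_attempts
        self.failed: dict[str, int] = {}    # consecutive failed attempts per user
        self.reset_tokens: dict[str, str] = {}

    def is_locked(self, user: str) -> bool:
        return self.failed.get(user, 0) >= self.max_attempts

    def login(self, user: str, password: str) -> bool:
        if self.is_locked(user):
            return False
        if self.users.get(user) == password:
            self.failed[user] = 0
            return True
        self.failed[user] = self.failed.get(user, 0) + 1
        return False

    def request_reset(self, user: str) -> Optional[str]:
        if user not in self.users:
            return None
        token = f"token-{user}"             # toy token; real systems use random tokens
        self.reset_tokens[user] = token
        return token

    def reset_password(self, user: str, token: Optional[str], new_password: str) -> bool:
        if token is None or self.reset_tokens.get(user) != token:
            return False
        self.users[user] = new_password
        self.failed[user] = 0               # a successful reset also unlocks the account
        del self.reset_tokens[user]
        return True

def test_login_valid_credentials():
    assert AuthService({"alice": "s3cret"}).login("alice", "s3cret")

def test_login_invalid_credentials():
    assert not AuthService({"alice": "s3cret"}).login("alice", "wrong")

def test_password_recovery():
    auth = AuthService({"alice": "s3cret"})
    token = auth.request_reset("alice")
    assert auth.reset_password("alice", token, "n3w")
    assert auth.login("alice", "n3w")

def test_lockout_after_failed_attempts():
    auth = AuthService({"alice": "s3cret"}, max_attempts=3)
    for _ in range(3):
        auth.login("alice", "wrong")
    assert not auth.login("alice", "s3cret")  # correct password rejected while locked

if __name__ == "__main__":
    for test in (test_login_valid_credentials, test_login_invalid_credentials,
                 test_password_recovery, test_lockout_after_failed_attempts):
        test()
    print("all login tests passed")
```

Under pytest the `test_` functions are collected automatically; the `__main__` block simply lets the file run without it.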

2. Enhanced Test Execution

In preparation for test execution, GenAI configures the testing environment, ensuring it mirrors the production environment. It generates necessary test data, including user accounts, product listings and transaction records. GenAI then executes test cases in parallel, significantly reducing the time required for testing. It dynamically adjusts test parameters based on real-time feedback and test outcomes. For instance, GenAI runs login tests on multiple browsers and devices simultaneously, ensuring compatibility and responsiveness across different platforms.
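
The parallel, cross-browser execution described above can be sketched with Python's standard `concurrent.futures` module. Here `run_test` is a hypothetical placeholder that simulates a Safari-only login failure, echoing the bug-report example later in this case study; a real runner would drive browsers through a tool such as Selenium or Playwright, and the browser, device and test lists are illustrative.

```python
import itertools
from concurrent.futures import ThreadPoolExecutor

BROWSERS = ["chrome", "firefox", "safari"]
DEVICES = ["desktop", "mobile"]
TESTS = ["login_valid", "login_invalid"]

def run_test(test: str, browser: str, device: str) -> tuple[str, str, str, bool]:
    """Hypothetical runner: simulates a login failure on Safari instead of driving a browser."""
    passed = not (test == "login_valid" and browser == "safari")
    return test, browser, device, passed

def run_matrix(max_workers: int = 4) -> list[tuple[str, str, str, bool]]:
    """Run every test on every browser/device combination concurrently."""
    combos = list(itertools.product(TESTS, BROWSERS, DEVICES))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in input order, so they line up with combos.
        return list(pool.map(lambda combo: run_test(*combo), combos))

results = run_matrix()
failures = [r for r in results if not r[3]]
print(f"{len(results)} runs, {len(failures)} failures")  # 12 runs, 2 failures
for test, browser, device, _ in failures:
    print(f"FAILED {test} on {browser}/{device}")
```

Threads suit this case because browser-driven tests spend most of their time waiting on I/O; CPU-heavy checks would call for processes instead.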

3. Analyzing Test Results

During test execution, GenAI collects logs, performance metrics and error messages. It analyzes the behavior of the application under various conditions, identifying anomalies and performance bottlenecks. Using machine learning algorithms, GenAI identifies patterns in the test results that indicate defects and performs root cause analysis to determine the underlying issues causing the defects. It assesses the impact of detected defects on the overall application functionality and user experience.
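
A common first step behind this kind of pattern detection, with or without machine learning, is grouping failures by a normalized error signature so that one underlying defect is not reported as many separate failures. The sketch uses regular expressions and counting rather than a learned model; the failure record shape (`module`, `error`) and the sample messages are hypothetical.

```python
import re
from collections import Counter

def signature(message: str) -> str:
    """Collapse volatile details (hex ids, then other numbers) so similar errors match."""
    message = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)
    message = re.sub(r"\d+", "<n>", message)
    return message.strip()

def cluster_failures(failures: list[dict]) -> list[tuple[tuple[str, str], int]]:
    """Group failures by (module, error signature), most frequent first."""
    counts = Counter((f["module"], signature(f["error"])) for f in failures)
    return counts.most_common()

failures = [
    {"module": "auth", "error": "TokenError: token 8812 expired early"},
    {"module": "auth", "error": "TokenError: token 9031 expired early"},
    {"module": "auth", "error": "TokenError: token 1207 expired early"},
    {"module": "cart", "error": "Timeout after 3000 ms"},
]
for (module, sig), count in cluster_failures(failures):
    print(f"{count}x {module}: {sig}")
# 3x auth: TokenError: token <n> expired early
# 1x cart: Timeout after <n> ms
```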

For example, for the login tests, GenAI identifies a pattern of failed logins due to a recently introduced bug in the authentication module. It traces the issue back to a specific code change and assesses its impact on user access. GenAI generates detailed bug reports, including steps to reproduce, logs, screenshots and suggested fixes, and provides recommendations for optimizing performance and improving code quality. A typical bug report might state: "Failed login with valid credentials on Safari browser due to incorrect token handling in the authentication module. Suggested fix: Update token validation logic."
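
Independent of GenAI, a standard way to pin a regression to a specific code change is binary search over the commit history, the idea behind `git bisect`. The sketch below assumes commits ordered from oldest to newest, a known-good first commit, a known-bad last commit, and a bug that persists once introduced. The commit ids and the `login_test_fails` predicate are hypothetical.

```python
from typing import Callable, Sequence

def first_bad_commit(commits: Sequence[str], is_bad: Callable[[str], bool]) -> str:
    """Binary search for the first commit where `is_bad` returns True.

    Assumes commits[0] is good, commits[-1] is bad, and every commit after
    the first bad one is also bad.
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] is good, commits[hi] is bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_bad(commits[mid]):
            hi = mid
        else:
            lo = mid
    return commits[hi]

# Hypothetical history in which the token-handling bug lands in commit "c5".
history = ["c1", "c2", "c3", "c4", "c5", "c6", "c7", "c8"]
broken_from = history.index("c5")
checked = []

def login_test_fails(commit: str) -> bool:
    checked.append(commit)  # record which commits were actually tested
    return history.index(commit) >= broken_from

print(first_bad_commit(history, login_test_fails), "found after", len(checked), "test runs")
# c5 found after 3 test runs
```

Binary search needs only about log2(n) test runs, which matters when each run exercises a full browser matrix.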

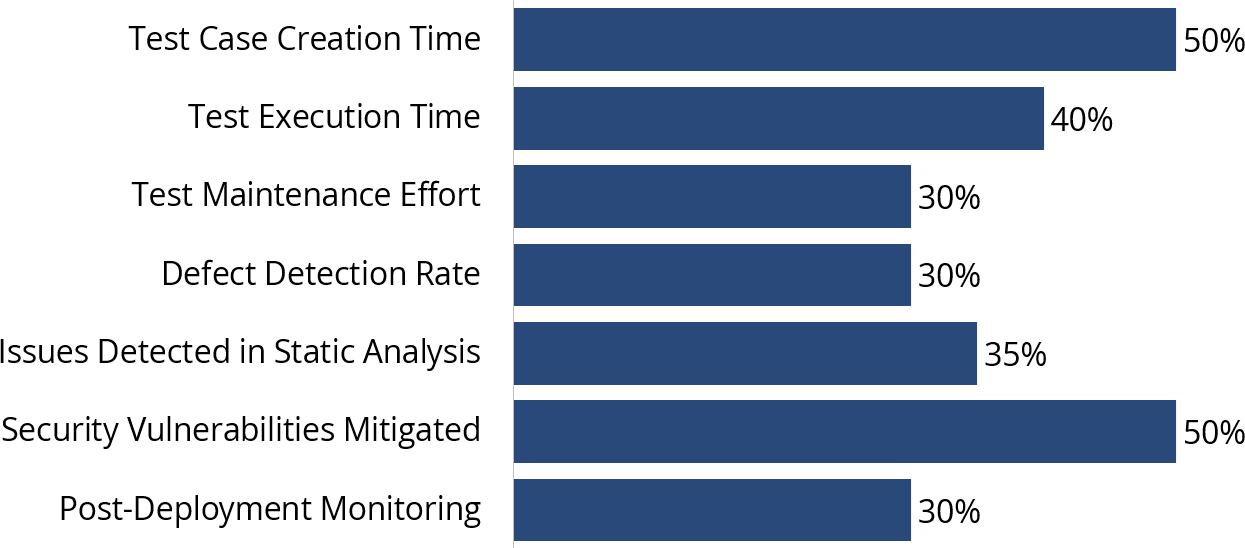

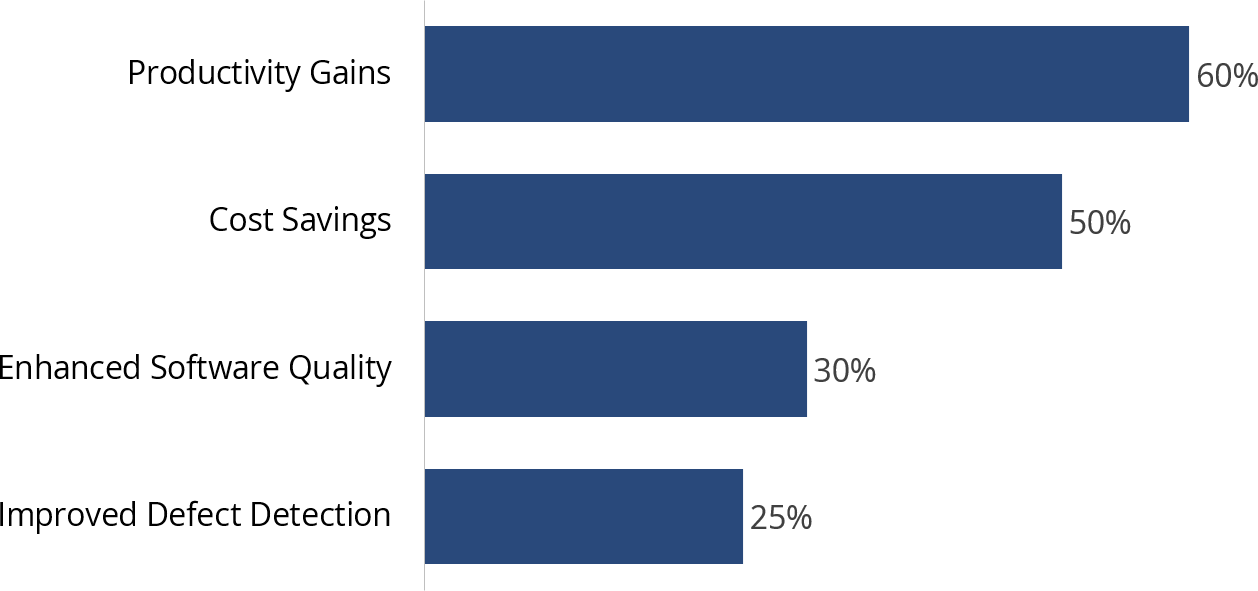

Percent Productivity Improvement with GenAI

Source: ISG Research May 2024

Improving Software Quality Metrics with GenAI

Consider a large e-commerce platform that relies on a complex web of applications to provide seamless user experiences. By integrating GenAI into its software testing processes, the company achieved significant improvements:

- Test case creation time was reduced by 50% while maintaining comprehensive coverage of various user scenarios.

- Test execution time decreased by 40%, allowing more frequent releases and quicker bug fixes.

- Continuous learning improved test case relevance and accuracy, reducing maintenance effort by 30%.

- Focusing testing on high-risk areas improved defect detection rates by 25%.

- The static analysis phase detected and resolved 35% more issues, resulting in a more stable codebase.

- Security vulnerabilities were identified and mitigated faster, strengthening the platform's overall security posture.

- Improved post-deployment monitoring allowed performance issues to be identified and resolved immediately.
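
Because each percentage applies to a different phase, the overall time saving depends on how effort was split before GenAI was introduced. The sketch below combines the three time-related reductions from this example using a purely hypothetical baseline of 40, 60 and 20 hours per release cycle; a different split would produce a different overall figure.

```python
# Per-phase reductions from the e-commerce example above, as fractions.
reductions = {"creation": 0.50, "execution": 0.40, "maintenance": 0.30}
# Hypothetical baseline effort per release cycle, in hours (not from the source).
baseline = {"creation": 40.0, "execution": 60.0, "maintenance": 20.0}

def overall_reduction(baseline: dict[str, float], reductions: dict[str, float]):
    """Overall reduction is the baseline-weighted average of the per-phase reductions."""
    before = sum(baseline.values())
    after = sum(hours * (1 - reductions[phase]) for phase, hours in baseline.items())
    return before, after, 1 - after / before

before, after, pct = overall_reduction(baseline, reductions)
print(f"{before:.0f} h -> {after:.0f} h ({pct:.1%} less effort)")
# 120 h -> 70 h (41.7% less effort)
```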

Percent Gains with GenAI Integrated across the Testing Lifecycle

Best Practices for Leveraging GenAI in Software Testing

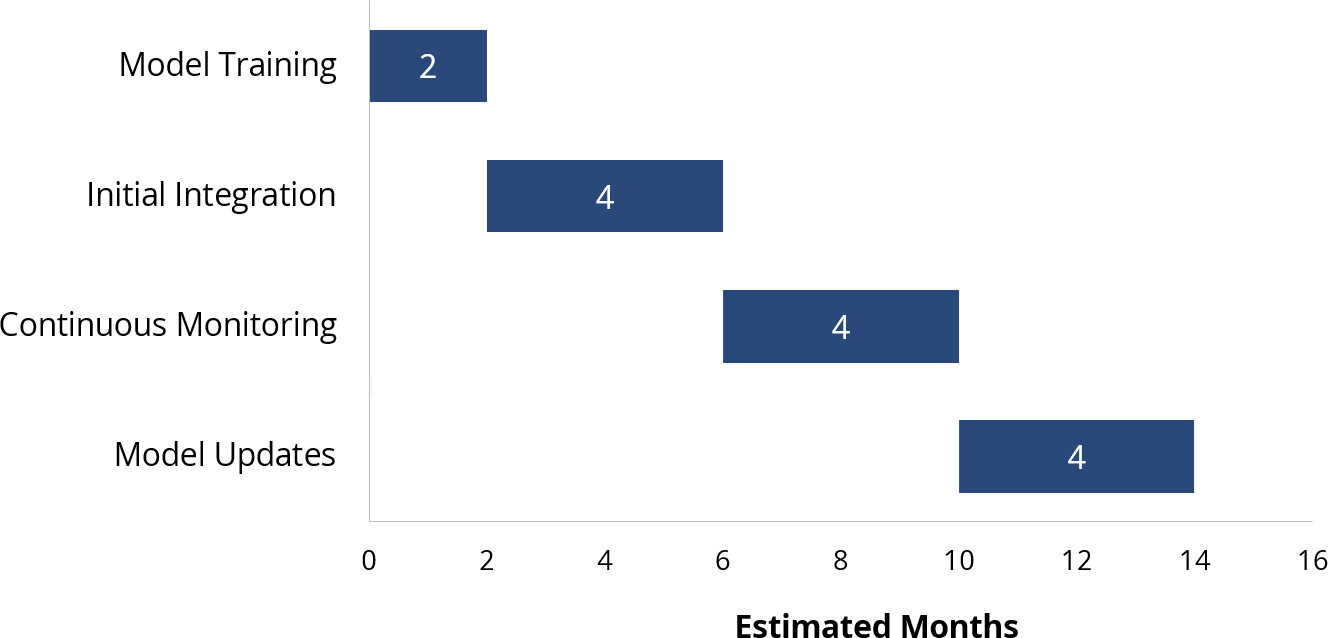

To maximize the benefits of GenAI in software testing, organizations should integrate GenAI tools early in the development process to catch issues sooner and streamline the testing workflow. They should also regularly update the AI models that generate and optimize test cases with new data so that the models remain accurate and relevant.

Combining AI with manual testing allows GenAI to handle repetitive and time-consuming tasks while reserving manual testing for exploratory testing and areas where human intuition is crucial. Investing in training for QA teams to effectively use and manage AI-driven testing tools ensures a smooth transition and maximizes the technology's potential. Organizations should continuously monitor the performance of AI-driven testing processes and make adjustments as needed to address any emerging challenges or changes in the application.
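
One concrete signal worth monitoring is flakiness in AI-generated tests: a test that both passes and fails against the same code adds noise rather than information. The sketch flags such tests from a run history. The `(test, commit, passed)` record format, the `gen_` test names and the 5% review threshold are illustrative assumptions.

```python
from collections import defaultdict

def flaky_tests(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Flag a test as flaky if it both passed and failed on the same commit."""
    outcomes = defaultdict(set)  # (test, commit) -> set of observed results
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return {test for (test, _), seen in outcomes.items() if len(seen) > 1}

def needs_review(runs: list[tuple[str, str, bool]], threshold: float = 0.05) -> bool:
    """Hypothetical policy: review the generated suite when more than 5% of tests are flaky."""
    tests = {test for test, _, _ in runs}
    if not tests:
        return False
    return len(flaky_tests(runs)) / len(tests) > threshold

runs = [
    ("gen_checkout_happy_path", "a1b2", True),
    ("gen_checkout_happy_path", "a1b2", True),
    ("gen_cart_zero_quantity", "a1b2", True),
    ("gen_cart_zero_quantity", "a1b2", False),  # same code, different result
    ("gen_login_lockout", "a1b2", False),       # consistently failing, not flaky
    ("gen_login_lockout", "c3d4", False),
]
print(flaky_tests(runs), needs_review(runs))  # {'gen_cart_zero_quantity'} True
```

A consistently failing test, like `gen_login_lockout` here, is treated as a potential real defect for human triage rather than as noise.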

Typical Journey to Integrate GenAI into the Testing Lifecycle

Source: ISG Research May 2024

Future Trends and Innovations in AI-Driven Software Testing

As the field of AI continues to evolve, several trends and innovations are expected to shape the future of AI-driven software testing:

- Increased adoption of AI-powered testing tools: Adoption is anticipated to grow significantly, with more small and medium-sized enterprises likely to integrate AI into their mainstream testing frameworks and processes.

- Advancements in autonomous testing: Autonomous testing, in which AI systems independently design, execute and analyze tests without human intervention, will see major advancements. This will further reduce the need for manual oversight and enhance the efficiency and accuracy of the testing process.

- Enhanced predictive analytics: AI systems will increasingly use predictive analytics to forecast potential issues and areas of concern in software applications. This proactive approach will allow development teams to address problems before they impact end users, resulting in more stable and reliable software.

- Integration with DevOps and CI/CD pipelines: The integration of AI-driven testing tools with DevOps and CI/CD pipelines will become more seamless. This will facilitate continuous testing and monitoring, ensuring that software quality is maintained throughout the development lifecycle and beyond.

- Greater emphasis on security testing: AI will play a pivotal role in enhancing security testing. AI-driven tools will be able to identify vulnerabilities more effectively and simulate sophisticated cyberattacks to test the resilience of applications. This will be crucial in an era where cybersecurity threats are becoming increasingly complex.

- AI-enhanced user-experience testing: AI will be leveraged to enhance user experience testing by simulating real user interactions and providing insights into how users engage with software applications. This will help developers optimize the user interface and overall user experience.

- Collaboration between AI and human testers: While AI will automate many aspects of software testing, the collaboration between AI and human testers will remain essential. Human testers will focus on exploratory testing and areas requiring creativity and intuition, while AI handles repetitive and data-intensive tasks.

GenAI is transforming the landscape of software testing by automating key processes, enhancing test accuracy and accelerating testing cycles. By leveraging GenAI, organizations can achieve significant improvements in productivity, cost savings and software quality. As the technology continues to evolve, its impact on software testing will only grow, making it an indispensable tool for modern software development.
