
ARTIFICIAL, INTELLIGENT ADVERSARIES?

Cybersecurity is widely recognized as a continuous arms race. Criminals and other bad actors deploy new technologies and exploit vulnerabilities to gain access to systems and further their objectives, while enterprise security organizations struggle to respond to attacks quickly enough and to anticipate new avenues of exploitation.

One particular challenge is that motivated attackers can adopt bleeding-edge technology in a way that enterprises encumbered by legacy estates cannot. In ISG's recent Cybersecurity Buyer Behavior study, infrastructure complexity and legacy equipment and applications rank as the top two organizational challenges affecting enterprise cybersecurity performance.

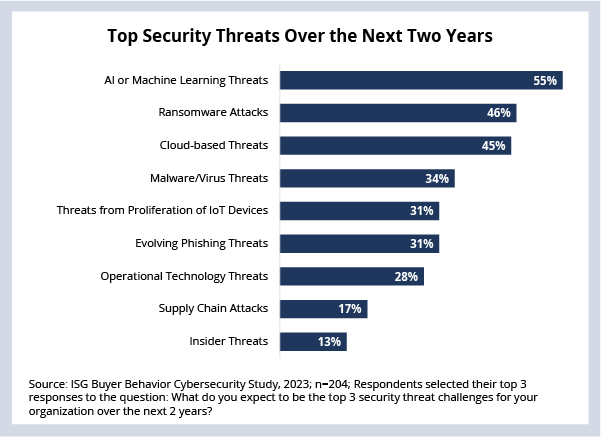

But enterprise security leaders aren't focused solely on reacting. When asked to identify their biggest threats over the next two years, 56% named AI and machine learning (ML) threats as their top challenge (see Data Watch). This is notable because, according to our other studies, AI and ML solutions are still mostly in pilot stages in large enterprises, with very limited and narrow production use cases.

The challenge is clear: attackers can put AI tools like ChatGPT, as well as open-source, general-purpose language models, to work writing phishing emails today. Large language models (LLMs) have already proven excellent at imitating writing styles, modeling idioms and producing natural-sounding translations, which makes it easier to generate increasingly convincing adversarial or malicious content.

Of the enterprises that participated in our study, 95% report at least one cybersecurity incident in the last 12 months, and 80% report a phishing or social engineering attack. Bad actors benefit from rapid prototyping and risk-free adoption of new technologies that can impersonate people, while enterprises are left to respond in the short term with better training and prevention efforts.

There is little doubt that, in the long run, AI itself will be employed to fight back. For now, however, AI is likely to be our enemy before it is our friend.

DATA WATCH